Six degrees of freedom

Let’s start with this common phrase. Our world consists of three spatial dimensions: length, width, and height. The word freedom refers to free movement in these three dimensions.

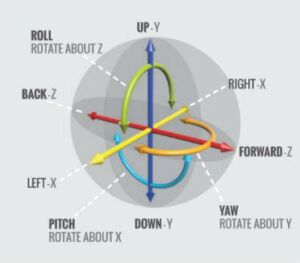

When we talk about movement, we mean both changing location by moving along an axis through space (known as translation) and rotating around an axis while remaining at the same coordinates. Consider how you would say you are moving when you are turning around, even though you remain at the same spot.

A camera in motion has six degrees of freedom. Moving forward/backward, up/down or left/right counts as translation. Rotation around an axis is termed pitch, yaw, or roll, depending on which axis we rotate around. Although some types of movement are more prevalent and affect video quality more strongly than others, video stabilization software has to understand, process and potentially handle all six. But first, all of it has to be measured very precisely.
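As a rough illustration, the six degrees of freedom can be written down as a small data structure. This is only a sketch: the class name `CameraMotion`, the field names, and the choice of units are assumptions made for this example, not part of any particular stabilizer.

```python
from dataclasses import dataclass, astuple


@dataclass
class CameraMotion:
    """Hypothetical container for one camera movement (six degrees of freedom)."""
    # Translation along the three spatial axes (assumed unit: meters).
    forward: float = 0.0
    right: float = 0.0
    up: float = 0.0
    # Rotation around the three axes (assumed unit: radians).
    pitch: float = 0.0
    yaw: float = 0.0
    roll: float = 0.0

    def is_pure_rotation(self) -> bool:
        """True when the camera turns but stays at the same coordinates."""
        translated = any((self.forward, self.right, self.up))
        rotated = any((self.pitch, self.yaw, self.roll))
        return rotated and not translated


# Turning around on the spot: movement without a change of location.
turning_around = CameraMotion(yaw=3.14159)
print(turning_around.is_pure_rotation())
print(len(astuple(turning_around)), "values describe the movement")
```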

Two key motion sensors with crucial data

Two types of motion sensors are of interest when tracking movement: the accelerometer and the gyroscope. Both deliver crucial data that is updated frequently.

Accelerometer

An accelerometer measures the g-force, that is, the acceleration, associated with the current movement. The sensor is a tiny chip with even tinier moving parts made of silicon (around half a millimeter thin). Watch Engineerguy’s YouTube video for a fascinating explanation and demonstration of how an accelerometer works in a smartphone.

Gyroscope

The gyroscope is more of an orientation tool for your camera in motion: it measures how fast the device rotates around each axis, which allows the roll, pitch and yaw of your device to be tracked automatically. A typical micro-electromechanical gyroscope inside small devices measures just a few millimeters.

Frequent motion sensor data updates

Information is pulled from these sensors a hundred times per second or more. Compare this to the typical video frame rate of 30 frames per second. So, why do we need sensor updates more often than there are new frames in the video? The answer will be revealed later in this post.
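To put numbers on that comparison, the short calculation below shows how many sensor readings arrive, on average, during a single video frame. The function name is made up for this example, and the rates are simply the figures mentioned above.

```python
def samples_per_frame(sensor_rate_hz: float, frame_rate_fps: float) -> float:
    """Average number of sensor readings that arrive during one video frame."""
    return sensor_rate_hz / frame_rate_fps


sensor_rate_hz = 100.0   # sensor readings per second (figure from the text)
frame_rate_fps = 30.0    # video frames per second (figure from the text)

print(f"Frame duration: {1000.0 / frame_rate_fps:.1f} ms")
print(f"Sensor interval: {1000.0 / sensor_rate_hz:.1f} ms")
print(f"Readings per frame: {samples_per_frame(sensor_rate_hz, frame_rate_fps):.2f}")
```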

How video stabilizers use motion sensor data

Movement can also be computed visually by analyzing movement in the image itself, using methods such as optical flow with tracking technology, as was discussed in the object tracking post. Video stabilization software reads translation and rotation information to calculate the total movement. This enables the stabilizer to modify each frame to create the scene you intended by separating intended motion from unintended motion – keeping only the former and canceling out the latter.
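One simple way to picture the separation of intended and unintended motion is to smooth the measured camera path and treat the smoothed curve as the intended motion. The sketch below does this for a single rotation angle per frame using a moving average. This is an assumption chosen for illustration, not necessarily how any given stabilizer works, and the function names and sample values are invented.

```python
def moving_average(path, radius):
    """Smooth a 1D camera path with a centered moving average.

    Near the ends of the path the window shrinks to the samples available.
    """
    smoothed = []
    for i in range(len(path)):
        lo = max(0, i - radius)
        hi = min(len(path), i + radius + 1)
        window = path[lo:hi]
        smoothed.append(sum(window) / len(window))
    return smoothed


def stabilizing_corrections(path, radius=2):
    """Per-frame correction that moves the raw path onto the smoothed one."""
    intended = moving_average(path, radius)
    return [s - p for s, p in zip(intended, path)]


# Hypothetical yaw angle per frame (degrees): a slow pan with hand shake on top.
raw_yaw = [0.0, 1.4, 1.7, 3.3, 3.8, 5.2, 6.1]
corrections = stabilizing_corrections(raw_yaw, radius=1)
stabilized = [p + c for p, c in zip(raw_yaw, corrections)]
print([round(a, 2) for a in stabilized])
```

A larger `radius` removes more shake but also makes the stabilized video lag behind deliberate camera moves, so the window size is a trade-off.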

But how do we go from raw data to a motion model?

For all of this to work, the data reported by the sensors must be expressed and analyzed mathematically. Although images are two-dimensional, the world we and our cameras live in is not. We have six degrees of freedom in our 3D world. A translation can easily be stated with a 3D vector, and rotation can be expressed with advanced matrix algebra, or with four-dimensional numbers.

Yes, four-dimensional numbers. Just as complex numbers are a two-dimensional extension of real numbers, quaternions are four-dimensional numbers. They can be added, subtracted, multiplied and divided according to their own special rules, which mostly mirror the arithmetic rules of real numbers, with one notable exception: the order of multiplication matters. It turns out that a rotation in 3D space can be represented with a unit quaternion, which provides a convenient mathematical notation for representing rotations of 3D objects.
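To make this more concrete, here is a minimal sketch of quaternion multiplication and of rotating a 3D vector with a unit quaternion. Quaternions are stored as `(w, x, y, z)` tuples; the function names are chosen for this example, and a real project would more likely rely on an existing math library.

```python
import math


def q_mul(a, b):
    """Hamilton product of two quaternions given as (w, x, y, z)."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )


def q_conj(q):
    """Conjugate; for a unit quaternion this is also its inverse."""
    w, x, y, z = q
    return (w, -x, -y, -z)


def q_from_axis_angle(axis, angle_rad):
    """Unit quaternion for a rotation of angle_rad around the given axis."""
    ax, ay, az = axis
    norm = math.sqrt(ax * ax + ay * ay + az * az)
    s = math.sin(angle_rad / 2.0) / norm
    return (math.cos(angle_rad / 2.0), ax * s, ay * s, az * s)


def rotate(q, v):
    """Rotate the 3D vector v by the unit quaternion q (computes q * v * q^-1)."""
    p = (0.0, v[0], v[1], v[2])
    _, x, y, z = q_mul(q_mul(q, p), q_conj(q))
    return (x, y, z)


# A quarter turn around the z axis maps the x axis onto the y axis.
quarter_turn = q_from_axis_angle((0.0, 0.0, 1.0), math.pi / 2.0)
print([round(c, 6) for c in rotate(quarter_turn, (1.0, 0.0, 0.0))])  # approximately (0, 1, 0)
```

Swapping the arguments of `q_mul` generally gives a different result, which matches the fact that the order of two 3D rotations matters.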

Compared with rotation matrices, unit quaternions are easier to compose, more numerically stable, and often more efficient, since they store four numbers instead of nine. The price is that they are less intuitive for a human reader. Representing rotations using unit quaternions also avoids an infamous problem in mechanical engineering known as gimbal lock, which appears when an orientation is described by three successive angles.
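Gimbal lock can be demonstrated with a few lines of code. The sketch below builds a rotation matrix from yaw, pitch and roll angles and shows that, once pitch reaches 90 degrees, two different angle combinations describe exactly the same orientation: one degree of freedom has been lost. The axis convention used here (yaw around z, pitch around y, roll around x, applied in that order) is an assumption for the example; other conventions show the same effect.

```python
import math


def mat_mul(a, b):
    """Product of two 3x3 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def euler_to_matrix(yaw, pitch, roll):
    """Rotation matrix Rz(yaw) * Ry(pitch) * Rx(roll), angles in radians."""
    cy, sy = math.cos(yaw), math.sin(yaw)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cr, sr = math.cos(roll), math.sin(roll)
    rz = [[cy, -sy, 0.0], [sy, cy, 0.0], [0.0, 0.0, 1.0]]
    ry = [[cp, 0.0, sp], [0.0, 1.0, 0.0], [-sp, 0.0, cp]]
    rx = [[1.0, 0.0, 0.0], [0.0, cr, -sr], [0.0, sr, cr]]
    return mat_mul(rz, mat_mul(ry, rx))


def max_abs_diff(a, b):
    """Largest element-wise difference between two 3x3 matrices."""
    return max(abs(a[i][j] - b[i][j]) for i in range(3) for j in range(3))


deg = math.radians
# With pitch at 90 degrees, only the difference (roll - yaw) matters.
locked_a = euler_to_matrix(deg(10), deg(90), deg(30))
locked_b = euler_to_matrix(deg(30), deg(90), deg(50))
print("pitch 90:", max_abs_diff(locked_a, locked_b))

# With pitch at 0 degrees, the same two angle pairs give different orientations.
free_a = euler_to_matrix(deg(10), deg(0), deg(30))
free_b = euler_to_matrix(deg(30), deg(0), deg(50))
print("pitch 0: ", max_abs_diff(free_a, free_b))
```

A unit quaternion stores the orientation directly instead of as three chained angles, so it has no such singular configuration.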

Even if this seems straightforward, the actual computations are not. Sensor data is “noisy”, i.e., it comes with a certain degree of inaccuracy, and it can only be polled a limited number of times per second. Turning all this data into one meaningful mathematical expression, especially on the low-power hardware typically used in cameras in motion such as drones, is a major challenge for video stabilizers. At the same time, hardware can compensate for some of this, as we will see in the next section.
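A small experiment shows why even slight inaccuracy matters. A gyroscope reports rotation speed, so the rotation angle has to be accumulated from one reading to the next, and any constant error in the readings accumulates with it. The sketch below integrates the readings of a camera that is actually standing still; the bias of 0.5 degrees per second and the 100 Hz sample rate are made-up values for illustration only.

```python
def integrate_rate(rates_deg_per_s, sample_rate_hz):
    """Accumulate angular-rate readings into an angle (simple summation)."""
    dt = 1.0 / sample_rate_hz
    angle = 0.0
    angles = []
    for rate in rates_deg_per_s:
        angle += rate * dt
        angles.append(angle)
    return angles


sample_rate_hz = 100          # hypothetical sensor rate
duration_s = 10
bias_deg_per_s = 0.5          # hypothetical constant sensor error

# The camera does not rotate at all, yet every reading is off by the bias.
readings = [0.0 + bias_deg_per_s] * (sample_rate_hz * duration_s)
estimated = integrate_rate(readings, sample_rate_hz)

print(f"Estimated rotation after {duration_s} s: {estimated[-1]:.2f} degrees")
```

The estimated angle drifts further from the truth the longer the recording runs, which is one reason raw readings cannot simply be added up and trusted.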